Explore science

Our research center helps companies anticipate challenges and introduce new products and processes. Beyond creating knowledge through our own research papers and publications, our scientific approach gives us a clear view of the open issues in AI and data science. We share this knowledge with our employees, our academic and business partners, and our clients through conferences, workshops and training courses. This is how we maintain expertise in the scientific topics we address and in the technologies we use, both in our research projects and with our clients. Through our research center, we pass digital scientific knowledge on to our teams first, then share it with our customers.

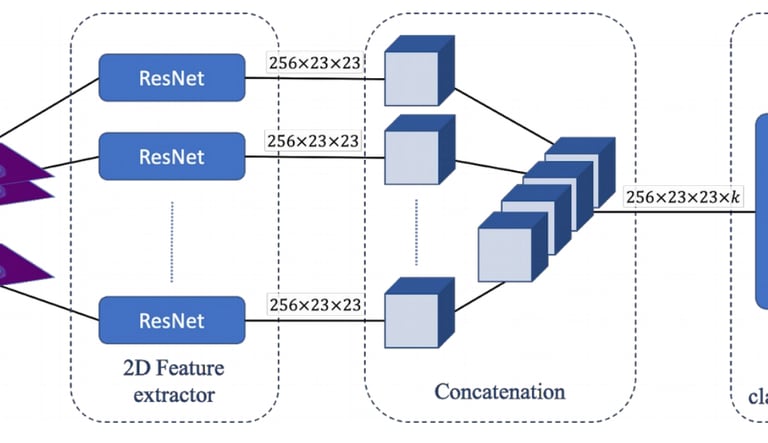

A deep learning architecture that combines a 2D feature extractor with a 3D CNN predictor. It uses a pre-trained 2D model to extract feature maps from 2D PET slices, then applies a 3D CNN to the concatenation of those feature maps to compute patient-wise predictions. The proposed pipeline outperforms PET radiomics approaches at predicting loco-regional recurrence and overall survival.
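The data flow described above can be sketched at the level of array shapes. This is a minimal illustration only, not the published model: the random kernels stand in for the pre-trained 2D backbone and the trained 3D CNN, and every name here (`extract_2d_features`, `conv3d_valid`, `predict_patient`), the layer sizes, and the sigmoid output head are assumptions.

```python
import numpy as np

def extract_2d_features(slice_2d, kernels):
    """Stand-in for a pre-trained 2D backbone: valid 2D correlation + ReLU.

    slice_2d: (H, W); kernels: (C, k, k) -> feature maps (C, H-k+1, W-k+1).
    """
    k = kernels.shape[-1]
    windows = np.lib.stride_tricks.sliding_window_view(slice_2d, (k, k))
    maps = np.einsum("hwij,cij->chw", windows, kernels)
    return np.maximum(maps, 0.0)

def conv3d_valid(volume, kernels):
    """Single valid 3D convolution layer + ReLU.

    volume: (C, D, H, W); kernels: (O, C, kd, kh, kw).
    """
    _, kd, kh, kw = kernels.shape[1:]
    windows = np.lib.stride_tricks.sliding_window_view(
        volume, (kd, kh, kw), axis=(1, 2, 3)
    )  # (C, D', H', W', kd, kh, kw)
    out = np.einsum("cdhwijk,ocijk->odhw", windows, kernels)
    return np.maximum(out, 0.0)

def predict_patient(pet_volume, kernels2d, kernels3d, weights, bias):
    """Slice-wise 2D features -> stacked volume -> 3D CNN -> one score per patient."""
    # 1. Run the 2D extractor on each PET slice independently.
    per_slice = [extract_2d_features(s, kernels2d) for s in pet_volume]
    # 2. Concatenate the slice feature maps along a new depth axis: (C, D, H', W').
    stacked = np.stack(per_slice, axis=1)
    # 3. Apply the 3D CNN, then global-average-pool to one vector per patient.
    pooled = conv3d_valid(stacked, kernels3d).mean(axis=(1, 2, 3))
    # 4. Linear head + sigmoid (assumed binary outcome such as recurrence).
    logit = float(pooled @ weights + bias)
    return 1.0 / (1.0 + np.exp(-logit))

rng = np.random.default_rng(0)
pet_volume = rng.random((8, 16, 16))                    # toy scan: 8 slices of 16x16
kernels2d = 0.1 * rng.standard_normal((4, 3, 3))        # 4 hypothetical 2D filters
kernels3d = 0.1 * rng.standard_normal((2, 4, 3, 3, 3))  # 2 hypothetical 3D filters
weights = rng.standard_normal(2)

risk = predict_patient(pet_volume, kernels2d, kernels3d, weights, 0.0)
```

In a real pipeline the 2D stage would be an actual pre-trained network and the 3D stage would be trained on patient outcomes; the sketch only shows how slice-level feature maps become a single patient-level prediction.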

Traditionally, nuclear physicians track tumors manually, focusing on the five largest ones (PERCIST criteria), which is both time-consuming and imprecise. Automated tumor tracking will allow real-time, precise matching of the numerous metastatic lesions across scans. The proposed method, which tracks tumors by image registration, is intended only as a baseline, yet it performs well on the annotated dataset, which is composed of simple cases.
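To make the idea of matching lesions across scans concrete, here is a minimal sketch under stated assumptions. It assumes that registration has already produced an affine transform from the baseline scan to the follow-up scan, that each lesion is summarised by its centroid in millimetres, and that lesions are paired greedily by nearest distance. The function names, the 10 mm threshold and the greedy strategy are illustrative choices, not the method described above.

```python
import math

def apply_affine(point, matrix, offset):
    """Map a 3D point with x' = A x + t (A and t assumed to come from registration)."""
    return tuple(
        sum(matrix[i][j] * point[j] for j in range(3)) + offset[i]
        for i in range(3)
    )

def match_lesions(baseline, follow_up, matrix, offset, max_dist_mm=10.0):
    """Greedy one-to-one matching of lesion centroids between two scans.

    baseline, follow_up: dicts mapping lesion id -> (x, y, z) centroid in mm.
    Returns a dict mapping baseline id -> follow-up id for matched lesions.
    """
    candidates = []
    for b_id, b_xyz in baseline.items():
        mapped = apply_affine(b_xyz, matrix, offset)
        for f_id, f_xyz in follow_up.items():
            distance = math.dist(mapped, f_xyz)
            if distance <= max_dist_mm:
                candidates.append((distance, b_id, f_id))

    # Accept the closest pairs first; each lesion is used at most once.
    candidates.sort()
    matches, used_b, used_f = {}, set(), set()
    for _, b_id, f_id in candidates:
        if b_id not in used_b and f_id not in used_f:
            matches[b_id] = f_id
            used_b.add(b_id)
            used_f.add(f_id)
    return matches

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
shift = (2.0, 0.0, -1.0)  # hypothetical patient shift between the two scans

baseline = {"B1": (10, 20, 30), "B2": (50, 60, 70), "B3": (100, 100, 100)}
follow_up = {"F1": (12.5, 20, 29), "F2": (51, 61, 69.5), "F3": (0, 0, 0)}

matches = match_lesions(baseline, follow_up, identity, shift)
```

Here `B1` and `B2` are paired with `F1` and `F2`, while `B3` and `F3` stay unmatched; in practice an unmatched baseline lesion might have resolved and an unmatched follow-up lesion might be new, but that interpretation is left to the reader.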

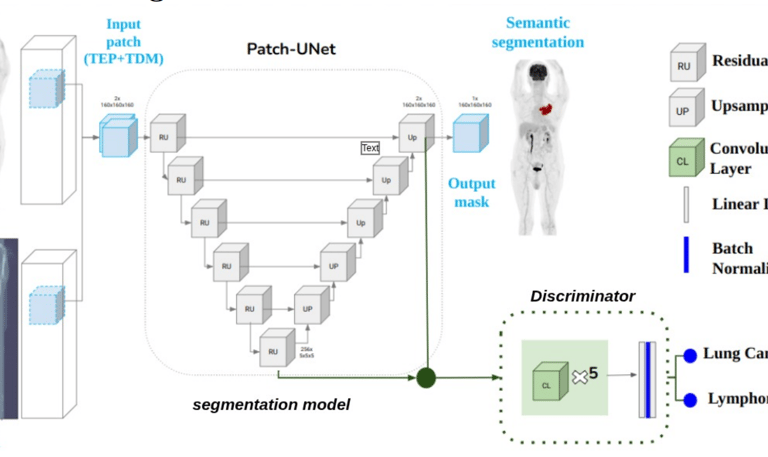

Integrating datasets from different cancer types can improve diagnostic accuracy, as deep learning models tend to generalise better with more data. However, this benefit is often limited by performance variance caused by biases, such as under- or over-representation of certain diseases. We propose a cancer-type-invariant model capable of segmenting tumours from both lymphoma and lung cancer, irrespective of their frequency or representation bias.
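One way to check whether a segmentation model really is insensitive to cancer type is to score it separately per type and look at the spread. The sketch below assumes the Dice coefficient as the overlap metric and uses tiny hand-made masks; the metric choice, the function names and the toy data are illustrative assumptions, not results or methods from the work described above.

```python
from statistics import mean, pstdev

def dice(pred, truth):
    """Dice overlap between two binary masks given as flat sequences of 0/1."""
    intersection = sum(p and t for p, t in zip(pred, truth))
    total = sum(pred) + sum(truth)
    return 1.0 if total == 0 else 2.0 * intersection / total

def dice_by_cancer_type(cases):
    """Average Dice per cancer type, plus the spread of those averages.

    cases: iterable of (cancer_type, predicted_mask, ground_truth_mask).
    A small spread suggests similar performance across cancer types.
    """
    scores = {}
    for cancer_type, pred, truth in cases:
        scores.setdefault(cancer_type, []).append(dice(pred, truth))
    per_type = {name: mean(values) for name, values in scores.items()}
    return per_type, pstdev(list(per_type.values()))

# Toy, hand-made masks (not real data): two lymphoma cases, one lung case.
cases = [
    ("lymphoma", [1, 1, 0, 0], [1, 1, 0, 0]),
    ("lymphoma", [1, 0, 0, 0], [1, 1, 0, 0]),
    ("lung",     [0, 1, 1, 0], [0, 1, 0, 0]),
]

per_type, spread = dice_by_cancer_type(cases)
```

Averaging within each type before computing the spread keeps an over-represented cancer type from dominating the summary, which is the bias the paragraph above warns about.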

NOVA AID

Better, Faster, Stronger Biomarkers

© 2025. All rights reserved.